Original poster # 2024-10-22 21:20:39

- 天岦

- Member

- Location: Wuhan, Hubei

- Registered: 2020-03-30

- Posts: 37

- Points: 40

C++11 code: condition variable wait() does not block (thread keeps using CPU)

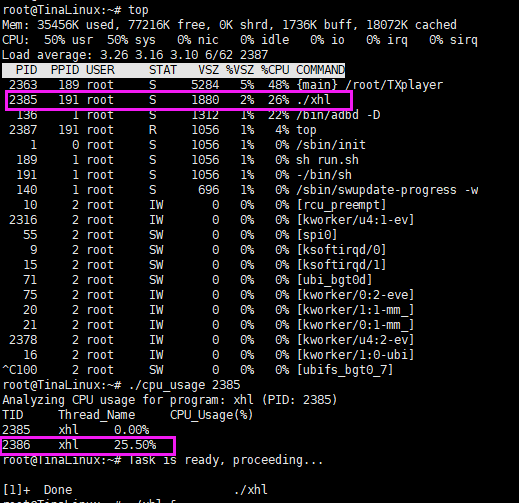

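On an Allwinner T113-s3 board, I use a `std::condition_variable` and call `wait()` to block until the condition is signaled. However, while the thread waits for the notification it does not release the CPU; it keeps consuming it the whole time. I can't make sense of this.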

Here is the test code:

```cpp
#include <chrono>               // std::chrono::seconds (missing in the original)
#include <condition_variable>
#include <iostream>
#include <mutex>
#include <thread>

std::mutex mtx;
std::condition_variable cond_not_empty_;
bool ready = false;

void wait_for_task() {
    std::unique_lock<std::mutex> lock(mtx);
    // Block until ready becomes true
    cond_not_empty_.wait(lock, []{ return ready; });
    std::cout << "Task is ready, proceeding...\n";
}

void signal_task() {
    std::lock_guard<std::mutex> lock(mtx);
    ready = true;
    // Wake the waiting thread
    cond_not_empty_.notify_one();
}

int main() {
    std::thread worker(wait_for_task);
    // Simulate other work
    std::this_thread::sleep_for(std::chrono::seconds(20));
    signal_task();  // Signal that the task is ready
    worker.join();
    return 0;
}
```

Result after running it: the main thread sleeps at 0% CPU, while the worker thread holds 25% CPU.
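For reference, the C++ standard specifies the predicate overload used in `wait_for_task()` to behave like the loop below: the thread re-checks `ready` after every wakeup and otherwise stays blocked inside the plain `wait()`. So on a conforming implementation the worker is expected to sleep while `ready` is false, not spin. The helper name `wait_with_loop` is hypothetical, chosen only for illustration.

```cpp
#include <condition_variable>
#include <mutex>
#include <thread>

// Hypothetical helper: a hand-written equivalent of cv.wait(lock, pred).
// The loop absorbs spurious wakeups; between checks the thread is blocked
// inside cv.wait(), which atomically releases the mutex while waiting and
// re-acquires it before returning.
template <class Predicate>
void wait_with_loop(std::condition_variable& cv,
                    std::unique_lock<std::mutex>& lock,
                    Predicate pred) {
    while (!pred()) {
        cv.wait(lock);
    }
}
```

Because `ready` is written under the same mutex that the waiter holds while checking it, a notification sent before the waiter reaches `wait()` is not lost: the waiter sees `ready == true` and never blocks.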


Original poster #1 2024-10-22 21:44:03


Re: C++11 code: condition variable wait() does not block (thread keeps using CPU)

The same code runs fine on the server: both threads show 0% CPU. Very strange.

```text
t113@t113-docker-1:~/t113/cpu_usage/build$ ./waitT &
[2] 9925
t113@t113-docker-1:~/t113/cpu_usage/build$ ./cpu_usage 9925
Analyzing CPU usage for program: waitT (PID: 9925)
TID Thread_Name CPU_Usage(%)
9925 waitT 0.00%
9926 waitT 0.00%
t113@t113-docker-1:~/t113/cpu_usage/build$ Task is ready, proceeding...
```
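One way to cross-check the `cpu_usage` figures on both machines is to have the waiting thread report its own CPU time. The sketch below assumes a POSIX/Linux system where `clock_gettime` supports `CLOCK_THREAD_CPUTIME_ID`; the function names `thread_cpu_seconds` and `measure_wait_cpu` are hypothetical. Where `wait()` really blocks, the reported value should stay close to zero however long the delay is.

```cpp
#include <chrono>
#include <condition_variable>
#include <mutex>
#include <thread>
#include <time.h>  // clock_gettime, CLOCK_THREAD_CPUTIME_ID (POSIX)

// CPU time consumed so far by the calling thread, in seconds.
double thread_cpu_seconds() {
    timespec ts{};
    clock_gettime(CLOCK_THREAD_CPUTIME_ID, &ts);
    return ts.tv_sec + ts.tv_nsec / 1e9;
}

// Starts one waiter that blocks on a condition variable for roughly `delay`,
// then returns how much CPU time that waiter used while it was waiting.
double measure_wait_cpu(std::chrono::milliseconds delay) {
    std::mutex mtx;
    std::condition_variable cv;
    bool ready = false;
    double used = 0.0;  // written by the waiter, read after join()

    std::thread waiter([&] {
        std::unique_lock<std::mutex> lock(mtx);
        const double start = thread_cpu_seconds();
        cv.wait(lock, [&] { return ready; });
        used = thread_cpu_seconds() - start;
    });

    std::this_thread::sleep_for(delay);
    {
        std::lock_guard<std::mutex> lock(mtx);
        ready = true;
    }
    cv.notify_one();
    waiter.join();
    return used;
}
```

Built with `-pthread` and called as `measure_wait_cpu(std::chrono::seconds(5))` on both the server and the board, a value far above zero on the board would point at the platform rather than at the test program.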

Original poster #2 2024-10-23 00:55:48


Re: C++11 code: condition variable wait() does not block (thread keeps using CPU)

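My guess is that it is either a compiler problem or a thread-scheduling problem in my Linux system. For now I have gone with a rather clumsy workaround: a while loop that calls sleep inside the loop to give up the CPU. This does bring down the threads' CPU usage, but the underlying problem remains.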

```text
root@TinaLinux:~# ./cpu_usage 312
Analyzing CPU usage for program: main (PID: 312)
TID Thread_Name CPU_Usage(%)
312 main 0.00%
313 scan_key 0.00%
314 mount_check 0.00%
315 read_rs485 7.50%
316 din_interrupt 0.00%
317 addr_led_blink 0.00%
318 addr_led_blink 0.00%
319 mixing 0.00%
325 send_codec 30.00%
326 send_spdif 35.50%
root@TinaLinux:~# kill -9 312
root@TinaLinux:~# Killed
TXplayer has stopped. Restarting in 5 seconds...
TXplayer lost executable permission. Adding executable permission again...
Thread Name: main, TID: 332
[ 1542.112483] sw_uart_check_baudset()903 - uart1, baud 500000 beyond rance
Thread Name: mount_check, TID: 334
Thread Name: scan_key, TID: 333
Thread Name: mixing, TID: 339
Thread Name: addr_led_blink, TID: 337
Thread Name: read_rs485, TID: 335
Thread Name: din_interrupt, TID: 336
Thread Name: addr_led_blink, TID: 338
[ 1542.149690] [SNDCODEC][sunxi_card_hw_params][620]:stream_flag: 0
[ 1542.374365] sunxi-spdif 2036000.spdif: active: 1
Thread Name: send_codec, TID: 345
Thread Name: send_spdif, TID: 346
root@TinaLinux:~# ./cpu_usage 332
Analyzing CPU usage for program: main (PID: 332)
TID Thread_Name CPU_Usage(%)
332 main 0.00%
333 scan_key 0.00%
334 mount_check 0.00%
335 read_rs485 7.50%
336 din_interrupt 0.00%
337 addr_led_blink 0.00%
338 addr_led_blink 0.00%
339 mixing 0.00%
345 send_codec 0.00%
346 send_spdif 1.00%
```
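A minimal sketch of the while + sleep workaround described in this post, assuming the condition can be expressed as a shared `std::atomic<bool>`. The names `task_ready`, `wait_by_polling`, `signal_by_flag` and the 10 ms interval are hypothetical, not taken from the actual program.

```cpp
#include <atomic>
#include <chrono>
#include <thread>

// Hypothetical shared flag standing in for the real program's condition.
std::atomic<bool> task_ready{false};

// Polling fallback: check the flag, then sleep so the thread yields the CPU.
// The 10 ms default is an assumed value, not one from the original program.
void wait_by_polling(std::chrono::milliseconds interval = std::chrono::milliseconds(10)) {
    while (!task_ready.load(std::memory_order_acquire)) {
        std::this_thread::sleep_for(interval);
    }
}

// Producer side: publish the flag; the poller sees it on its next check.
void signal_by_flag() {
    task_ready.store(true, std::memory_order_release);
}
```

The trade-off is that the thread still wakes once per interval, and a signal can be noticed up to one interval late; a longer interval lowers CPU use but raises that latency. It also relies on `sleep_for` itself blocking properly on the board, which the numbers above suggest it does.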

Original poster #4 2024-10-24 15:08:38


Re: C++11 code: condition variable wait() does not block (thread keeps using CPU)

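I switched to a different cross-compiler and rebuilt the program, but the CPU usage problem is still not solved. My current suspicion is that Tina Linux itself is the cause.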


Original poster #5 2024-10-24 15:16:09


Re: C++11 code: condition variable wait() does not block (thread keeps using CPU)

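Testing has now confirmed that Tina Linux was indeed the cause. After flashing a different image, the thread calling `wait()` is suspended and uses 0% CPU, so the test behaves correctly. I have since modified the SDK and rebuilt the system. (PS: avoid SDKs of unknown origin; once you fall into one of their pits, it is very hard to climb back out.)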

```text
root@TinaLinux:~# ./xhl &
root@TinaLinux:~# top
Mem: 18828K used, 93844K free, 0K shrd, 1248K buff, 2716K cached
CPU: 0% usr 4% sys 0% nic 95% idle 0% io 0% irq 0% sirq
Load average: 0.00 0.00 0.00 1/55 225
PID PPID USER STAT VSZ %VSZ %CPU COMMAND
223 150 root S 1880 2% 0% ./xhl
143 1 root S 1312 1% 0% /bin/adbd -D
1 0 root S 1056 1% 0% /sbin/init
150 1 root S 1056 1% 0% -/bin/sh
225 150 root R 1056 1% 0% top
141 1 root S 696 1% 0% /sbin/swupdate-progress -w
55 2 root SW 0 0% 0% [spi0]
10 2 root IW 0 0% 0% [rcu_preempt]
21 2 root IW 0 0% 0% [kworker/1:1-ubi]
53 2 root IW 0 0% 0% [kworker/u4:1-ev]
7 2 root IW 0 0% 0% [kworker/u4:0-ev]
5 2 root IW 0 0% 0% [kworker/0:0-mm_]
15 2 root SW 0 0% 0% [ksoftirqd/1]
73 2 root IW 0 0% 0% [kworker/1:2-ubi]
20 2 root IW 0 0% 0% [kworker/0:1-ubi]
9 2 root SW 0 0% 0% [ksoftirqd/0]
103 2 root SW 0 0% 0% [ubifs_bgt0_7]
16 2 root IW 0 0% 0% [kworker/1:0-mm_]
19 2 root SW 0 0% 0% [rcu_tasks_kthre]
^C 17 2 root IW< 0 0% 0% [kworker/1:0H-kb]
root@TinaLinux:~# ./cpu_usage 223
Analyzing CPU usage for program: xhl (PID: 223)
TID Thread_Name CPU_Usage(%)
223 xhl 0.00%
224 xhl 0.00%
root@TinaLinux:~# Task is ready, proceeding...
```

Last edited by 天岦 (2024-10-24 15:16:30)


Original poster #7 2024-10-24 15:44:22


Re: C++11 code: condition variable wait() does not block (thread keeps using CPU)

海石生风 wrote:

Since you suspect the compiler: which version is it? Where did it come from? Have you tried a different compiler?

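The code itself checked out, so the natural next step was to rule out the compiler and the system. I did switch compilers, and that is what led to the system-level investigation above.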
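To answer the version question concretely, a test binary can report the toolchain it was built with. This is a sketch assuming GCC or Clang (which predefine `__VERSION__`) and libstdc++ (which defines `__GLIBCXX__` as a date stamp once any standard header is included); the function name `toolchain_info` is hypothetical.

```cpp
#include <string>

// Hypothetical helper: describes the compiler and C++ standard library
// this translation unit was built with, using predefined macros only.
std::string toolchain_info() {
    std::string info;
#ifdef __VERSION__
    info += "compiler: " __VERSION__;  // string literal from GCC/Clang
#else
    info += "compiler: unknown";
#endif
#ifdef __GLIBCXX__
    // libstdc++ release date stamp, e.g. YYYYMMDD
    info += ", libstdc++: " + std::to_string(__GLIBCXX__);
#endif
    return info;
}
```

Printing this from builds made with the old and the new cross-compiler, and running both on the board, shows which toolchain each binary really came from. That helps separate a compiler issue from a system-image issue like the one found here.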

